“WITHOUT BIG DATA ANALYTICS, COMPANIES ARE BLIND AND DEAF, WANDERING OUT ONTO THE WEB LIKE DEER ON A FREEWAY” – GEOFFREY MOORE

Big Data has become a trend worth following for its evolution, the interest it attracts and its possible future scenarios. Multinational corporations and large companies are watching it closely and have underlined its importance by creating ad hoc executive roles. Small and medium-sized enterprises have also decided to keep an eye on Big Data.

WHERE DOES THIS ATTENTION TO BIG DATA COME FROM?

According to Geoffrey Moore, the American management consultant and author, “Without big data analytics, companies are blind and deaf, wandering out onto the web like deer on a freeway”.

The dreaded feeling of wandering without purpose is growing, since Big Data represents the “raw material of business activities”, to quote Craig Mundie, Senior Advisor to Microsoft’s CEO.

We know, therefore, that Big Data is essential for the current development of companies and, above all, for their future. Why is that?

To paraphrase the Indian writer Ankala V. Subbarao: “Analyzing data means providing information; reworking information means creating consciousness and reworking consciousness means giving birth to wisdom”.

Hence, it is clear that the data and information derived from Big Data are the source from which true knowledge is born: a cultural heritage that is not based on opinions but on statistics and algorithms that change based on the input they receive.

Hence the need to understand every aspect of the world in which companies operate by using Big Data, collecting all the information that is not so easily identified, in order to steer businesses towards more strategic and accurate goals.

To realize this vision, Interlogica has decided to host a workshop to share what the company has brought to the market.

Before delving into our strategic approach, let’s talk about the history of Big Data.

WHAT’S BIG DATA? WHERE DOES IT COME FROM? WHO INVENTED IT?

Big Data: two words that do not seem to refer to anything in particular. However, over the last two decades they have shaped the collective consciousness, evoking an immediate association with volumes of information that the human mind cannot conceive or understand.

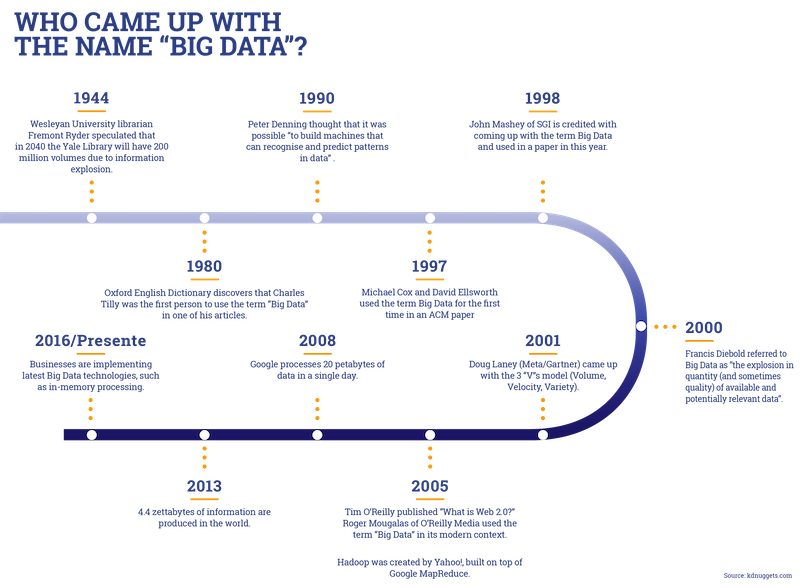

The concept began to take shape in 1944 with research led by Fremont Rider, the Wesleyan University librarian, which introduced the idea of the Information Explosion.

Specifically, the research stated “that American university libraries were doubling in size every sixteen years. Given this growth rate, Rider speculated that the Yale Library, by 2040, would have required a cataloging staff of over 6,000 people and more than 6,000 miles of shelves”.
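Rider's projection is a case of compound doubling, and the arithmetic is easy to check. The short Python sketch below counts the doublings between 1944 and 2040 and the resulting growth factor. The function name `growth_factor` is ours, chosen for illustration, and no actual library size is assumed.

```python
def growth_factor(start_year: int, end_year: int, doubling_period: float) -> float:
    """Return how many times a quantity multiplies if it doubles every `doubling_period` years."""
    doublings = (end_year - start_year) / doubling_period
    return 2 ** doublings

# 1944 to 2040 is 96 years, i.e. 6 doublings at one doubling every 16 years.
factor = growth_factor(1944, 2040, 16)
print(factor)  # 64.0
```

In other words, a collection that kept doubling every sixteen years would be 64 times larger in 2040 than it was in 1944.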

Rider, however, did not foresee the digitization of libraries. This is probably why he spoke of an information assault or, I would add, a paper-based assault.

From then until the 2000s, researchers from different sectors produced a series of publications, speeches and presentations that gave a more persuasive view of Big Data and tied it closely to its quantitative and qualitative characteristics.

The beginning of the new millennium marked a milestone in the understanding of this concept. With the term appearing for the first time in the Times newspaper, Francis Diebold defined Big Data as “the explosion in the quantity (and sometimes, quality) of available and potentially relevant data”.

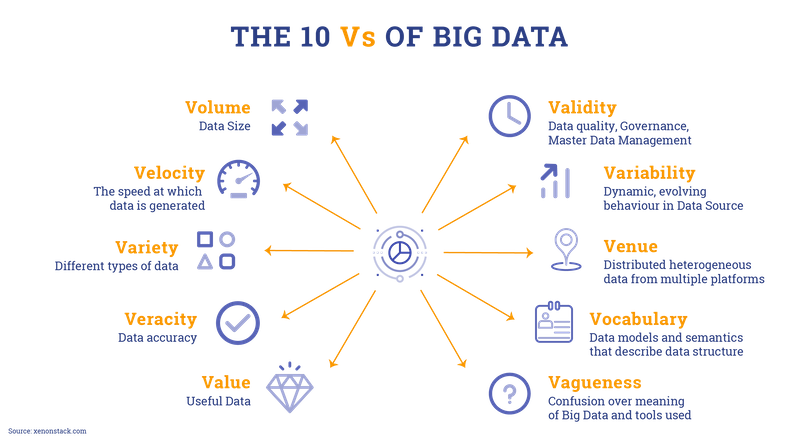

The following year, Big Data acquired even more importance thanks to the work of Doug Laney, who described its defining properties: the famous 3Vs.

- Volume describes how the unit of measurement for Big Data has moved from Terabytes to Zettabytes.

- Velocity refers to the speed at which data is generated.

- Variety refers to the data format, particularly the transition from structured to unstructured data.

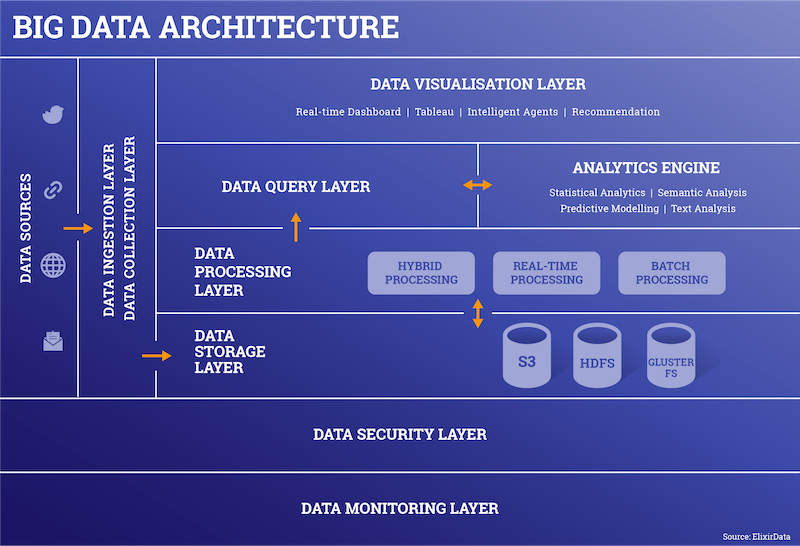

Because Big Data comes from many different types of sources, it is difficult to connect to those sources, extract the data and identify what has changed; these three phases make up Big Data ingestion. In essence, ingestion is the transfer of data from the place where it is generated to a more accessible system that can store and analyze it.
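As a rough illustration of these three phases, here is a minimal Python sketch. Everything in it is an assumption made for the example: the in-memory `SOURCES` dictionary stands in for real databases, files or APIs, and the helper names `connect`, `extract`, `detect_changes` and `ingest` are hypothetical, not part of any specific product.

```python
from typing import Dict, List

# Hypothetical in-memory "sources"; in practice these would be databases, files or APIs.
SOURCES: Dict[str, List[dict]] = {
    "crm": [{"id": 1, "value": "alice"}, {"id": 2, "value": "bob"}],
    "sensors": [{"id": 10, "value": 21.5}],
}

def connect(name: str) -> List[dict]:
    """Phase 1: obtain a handle on a source (here, just a dictionary lookup)."""
    return SOURCES[name]

def extract(handle: List[dict], source: str) -> Dict[tuple, dict]:
    """Phase 2: copy the records out, keyed by (source, id)."""
    return {(source, rec["id"]): dict(rec) for rec in handle}

def detect_changes(previous: Dict[tuple, dict], current: Dict[tuple, dict]) -> Dict[tuple, dict]:
    """Phase 3: keep only records that are new or differ from the stored copy."""
    return {key: rec for key, rec in current.items() if previous.get(key) != rec}

def ingest(store: Dict[tuple, dict]) -> int:
    """Run the three phases for every source and load the changes into `store`."""
    loaded = 0
    for name in SOURCES:
        current = extract(connect(name), name)
        changes = detect_changes(store, current)
        store.update(changes)
        loaded += len(changes)
    return loaded

central_store: Dict[tuple, dict] = {}
first_run = ingest(central_store)       # every record is new
SOURCES["sensors"][0]["value"] = 22.0   # simulate a change at the source
second_run = ingest(central_store)      # only the changed record is loaded
print(first_run, second_run)  # 3 1
```

The second run loads a single record because only the sensor reading changed, which is the point of identifying variations before moving data to the central system.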

Recent studies have identified a fourth V: Veracity, meaning the reliability of data, as opposed to data that proves inconsistent and creates ambiguity and approximation.

More recently, further Vs have been identified, bringing the total to 10.

THE TURNING POINT

Building on these considerations about the characteristics of Big Data, a number of companies began to take an interest in Big Data and its practical use. However, their demands were not met until 2005, the second milestone in the history of Big Data.

2005 is the year in which Apache Hadoop appeared. This framework supports distributed applications that can work with petabytes of data.

It was a new development for Big Data: Hadoop was the practical answer to the need for a tangible application that could be integrated into the different sectors of the market.

Credit and insurance organizations were the first to show interest. The former needed to evaluate creditworthiness and the granting of credit more accurately; the latter needed to match insurance premiums to the real risk of customers causing accidents.

The potential to restore this balance, especially for insurance premiums, thanks to the precise analyses that Big Data made possible in these two sectors, received a strong boost when the Internet of Things (IoT) arrived on the market.

Currently, companies in the energy sector are strongly oriented towards integrating the IoT with Big Data.

IoT technology is easier to apply when it is paired with Big Data.

The IoT requires a large number of sensors, or physical objects, that capture every type of data and transmit it to a control panel, which analyzes and updates the collected information in real time. This type of technology introduces the concept of Real Time Analytics, namely the ability to access data from the moment it is created or received.
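To make the idea concrete, the following Python sketch updates a per-sensor average each time a reading arrives, rather than waiting for a batch to be collected. It is only an illustration under stated assumptions: the sensor identifiers, the temperature values and the names `RunningStats` and `analyze_stream` are hypothetical, and a real deployment would read from a message queue or stream rather than a list.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, Tuple

@dataclass
class RunningStats:
    count: int = 0
    mean: float = 0.0

    def update(self, value: float) -> None:
        """Fold one new reading into the mean without storing past readings."""
        self.count += 1
        self.mean += (value - self.mean) / self.count

def analyze_stream(readings: Iterable[Tuple[str, float]]) -> Iterator[Tuple[str, float]]:
    """Yield (sensor_id, current mean) immediately after each reading arrives."""
    stats: Dict[str, RunningStats] = {}
    for sensor_id, value in readings:
        sensor_stats = stats.setdefault(sensor_id, RunningStats())
        sensor_stats.update(value)
        yield sensor_id, sensor_stats.mean

# Hypothetical readings: (sensor id, temperature).
stream = [("s1", 20.0), ("s2", 30.0), ("s1", 22.0), ("s1", 24.0)]
results = list(analyze_stream(stream))
for sensor_id, mean in results:
    print(sensor_id, mean)
```

Because the statistics are updated incrementally, the latest figure is available as soon as each reading is received, which is the essence of the real-time approach described above.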

THE STRATEGIC VISION

This opportunity has placed Interlogica at the center of a strategic vision that envisages exchanging valuable data and information, at any given time, with the clients of the B2B channel companies with which it has commercial relations.

The transition from Data Analytics to Real Time Analytics, which the IoT also accelerated, marks a crucial phase in the analysis and processing of Big Data. It makes predictive statistics faster and more accurate than the older method of Data Mining (a set of techniques for extracting information from large amounts of data, which is then analyzed in order to associate different variables with single individuals).

Undoubtedly, Real Time data analysis is an advantage for all firms that want to use innovative trends to stay at the head of their sector.

Combining Real Time Analytics on Big Data with up-to-date Business Intelligence (BI) tools means being able to keep pole position in the market, but it doesn’t stop there. Another important advantage for players is the ability to easily cross-query multi-vendor Big Data structures and Data Lake structures.

This goal can be easily reached through intuitive end-to-end solutions with advanced functions, aimed at analysts: tools suited both to data analysis experts and to less experienced users who may have a commercial background but who, with these tools, can steer the business in a given direction.

Seizing this opportunity means competing with a strong advantage for a share of a market filled with potential, a resource that companies cannot ignore, not least because this opportunity has not yet been fully explored by all the players.

Our firm understands the value of the close link between IoT and Big Data but, most of all, we believe in the intrinsic value of linking a powerful BI front-end tool with Real Time Analytics on different Big Data/Data Lake sources.

For this reason, we offer solutions as well as in-depth analysis sessions that enable our clients to base their business growth on objective and meaningful data, while also focusing on particular data that is not always easily perceived.